- July 08, 2024

- By Georgia Jiang

A team led by University of Maryland computer scientists has invented a camera mechanism that improves how robots see and react to the world around them. Inspired by how the human eye works, its innovative camera system mimics the tiny involuntary movements used by the eye to maintain clear and stable vision.

The team’s prototyping and testing of the camera—called the Artificial Microsaccade-Enhanced Event Camera (AMI-EV)—was detailed in a paper published recently in Science Robotics.

Event cameras are a relatively new technology better at tracking moving objects than traditional cameras, but today’s event cameras struggle to capture sharp, blur-free images when a lot of motion is involved, said the paper’s lead author, Botao He, a computer science Ph.D. student at UMD.

“It’s a big problem because robots and many other technologies—such as self-driving cars—rely on accurate and timely images to react correctly to a changing environment,” He said. “So, we asked ourselves: How do humans and animals make sure their vision stays focused on a moving object?”

For He’s team, the answer was microsaccades, the minute and quick eye movements that occur without conscious thought to help the human eye focus on objects and visual textures—such as color, depth and shadowing.

“We figured that just like how our eyes need those tiny movements to stay focused, a camera could use a similar principle to capture clear and accurate images without motion-caused blurring,” He said.

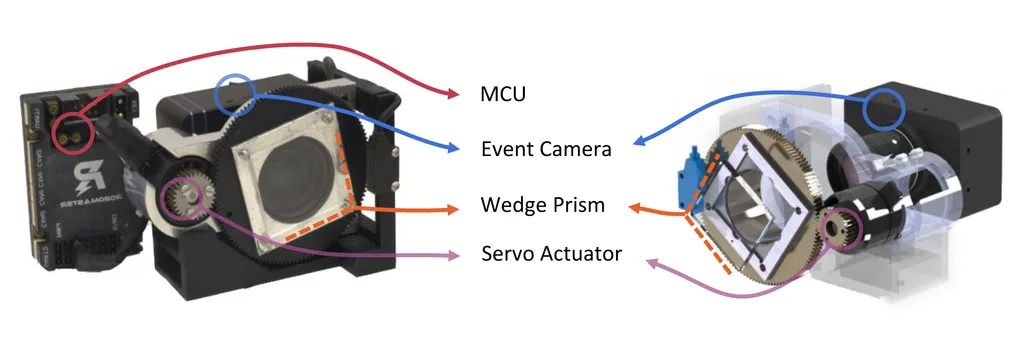

The team successfully replicated microsaccades by inserting a rotating prism inside the AMI-EV to redirect light beams captured by the lens. The continuous rotational movement of the prism simulated the movements naturally occurring within a human eye, allowing the camera to stabilize the textures of a recorded object just as a human would. The team then developed software to compensate for the prism’s movement within the AMI-EV to consolidate stable images from the shifting lights.
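The compensation idea can be illustrated with a toy model. The sketch below is not the team's software. It assumes, purely for illustration, that the rotating prism shifts the whole image along a circle of known radius at a known rotation rate, so the shift at any event timestamp can be computed and subtracted. The function names, the `(t, x, y, polarity)` event layout, and all parameter values are hypothetical.

```python
import math


def prism_offset(t, radius_px, rev_per_s, phase=0.0):
    """Image shift (dx, dy) in pixels that the assumed prism model induces at time t."""
    angle = 2.0 * math.pi * rev_per_s * t + phase
    return radius_px * math.cos(angle), radius_px * math.sin(angle)


def simulate_static_point(x0, y0, radius_px, rev_per_s, n_events, duration_s):
    """Events (t, x, y, polarity) from one static scene point seen through the prism.

    Hypothetical stand-in for real sensor output: the point itself never moves,
    but its image traces a circle on the sensor as the prism rotates.
    """
    events = []
    for i in range(n_events):
        t = duration_s * i / n_events
        dx, dy = prism_offset(t, radius_px, rev_per_s)
        events.append((t, x0 + dx, y0 + dy, 1))
    return events


def compensate(events, radius_px, rev_per_s, phase=0.0):
    """Subtract the known prism-induced shift from every event's coordinates."""
    stabilized = []
    for t, x, y, polarity in events:
        dx, dy = prism_offset(t, radius_px, rev_per_s, phase)
        stabilized.append((t, x - dx, y - dy, polarity))
    return stabilized


if __name__ == "__main__":
    # Assumed values: 5-pixel shift radius, 100 revolutions per second, 20 ms of data.
    raw = simulate_static_point(100.0, 50.0, 5.0, 100.0, 200, 0.02)
    fixed = compensate(raw, 5.0, 100.0)
    print("raw x range:", min(e[1] for e in raw), max(e[1] for e in raw))
    print("stabilized x range:", min(e[1] for e in fixed), max(e[1] for e in fixed))
```

In this toy setup the raw events smear across a circle, while the compensated events collapse back onto the point's true position. A real system would also have to calibrate the prism's geometry and synchronize its rotation with the sensor clock, which this sketch leaves out.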

Study co-author Yiannis Aloimonos, a professor of computer science at UMD, called the team’s invention a big step forward in the realm of robotic vision.

“Our eyes take pictures of the world around us, and those pictures are sent to our brain, where the images are analyzed. Perception happens through that process and that’s how we understand the world,” explained Aloimonos, who is also director of the Computer Vision Laboratory at the University of Maryland Institute for Advanced Computer Studies (UMIACS). “When you’re working with robots, replace the eyes with a camera and the brain with a computer. Better cameras mean better perception and reactions for robots.”

The researchers also believe that their innovation could have significant implications beyond robotics. Scientists working in industries that rely on accurate image capture and shape detection are constantly looking for ways to improve their cameras, and AMI-EV could be the key solution to many of the problems they face.

With their unique features, event sensors and AMI-EV are poised to take center stage in the realm of smart wearables, said research scientist Cornelia Fermüller, senior author of the paper.

“They have distinct advantages over classical cameras, such as superior performance in extreme lighting conditions, low latency and low power consumption,” she said. “These features are ideal for virtual reality applications, for example, where a seamless experience and the rapid computations of head and body movements are necessary.”

In early testing, AMI-EV was able to capture and display movement accurately in a variety of contexts, including human pulse detection and rapidly moving shape identification. The researchers also found that AMI-EV could capture motion at tens of thousands of frames per second, outperforming most commercially available cameras, which typically capture 30 to 1,000 frames per second. This smoother, more realistic depiction of motion could prove pivotal in anything from creating more immersive augmented reality experiences and better security monitoring to improving how astronomers capture images in space.

“Our novel camera system can solve many specific problems, like helping a self-driving car figure out what on the road is a human and what isn’t,” Aloimonos said. “As a result, it has many applications that much of the general public already interacts with, like autonomous driving systems or even smartphone cameras. We believe that our novel camera system is paving the way for more advanced and capable systems to come.”