- April 08, 2020

- By Maria Herd M.A. ’19

From detecting illegal stock market activity to increasing safety in self-driving vehicles to improving facial recognition, machine learning systems promise to revolutionize a range of security applications. Now a team of University of Maryland computing experts is working to ensure adversaries can't penetrate them.

A new $3.1 million grant from the Defense Advanced Research Projects Agency (DARPA) will support efforts to develop safeguards against deception attacks on machine learning algorithms, which let systems learn from data and make decisions with a minimum of human programming. Attacks against these systems are an emerging security threat as artificial intelligence (AI) is increasingly applied to industrial settings, medicine, information analysis and more.

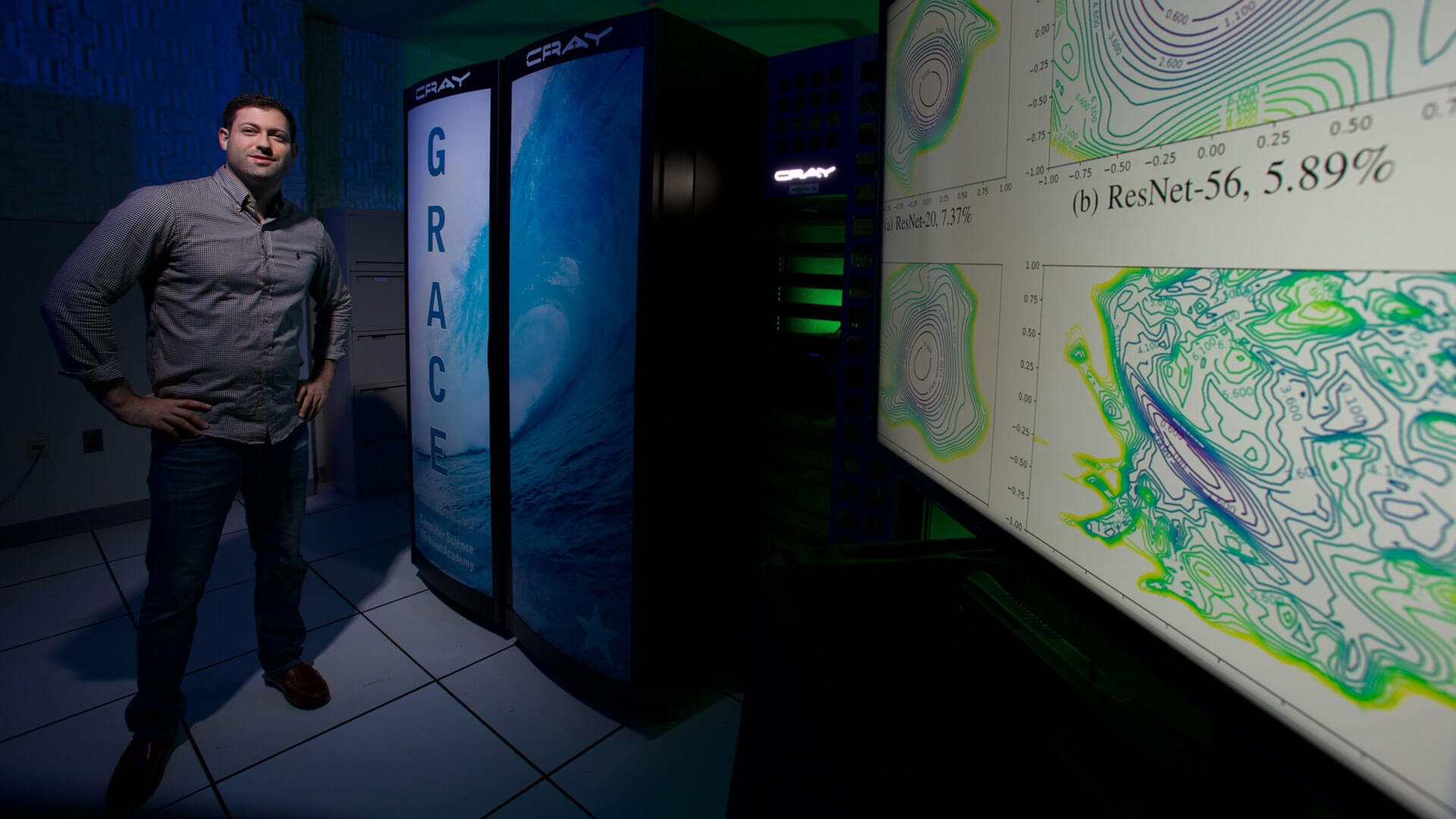

“We want to understand the vulnerabilities of these systems, craft defenses that would make it difficult to attack them, and improve the overall security of these systems,” said Tom Goldstein, an associate professor of computer science, who is principal investigator of the four-year project.

The research will encompass a wide range of systems, including some that have not yet been studied, like copyright detection and stock market bots.

“As far as I’m aware, no one has actually looked at the security of machine learning for those applications,” said Goldstein. “We’re the first people to do it.”

Goldstein’s partners in the research project are Assistant Professors John Dickerson, Furong Huang and Abhinav Shrivastava and Professor David Jacobs—all in the Department of Computer Science—and computer science Professor Jonathan Katz of George Mason University.

Collectively, the researchers bring to bear expertise in machine learning, AI, cybersecurity and computer vision. All have appointments in the University of Maryland Institute for Advanced Computer Studies, where Dickerson, Goldstein, Huang and Jacobs are also core faculty members of the University of Maryland Center for Machine Learning.

The goals of the DARPA project are threefold, Goldstein said. The first is to improve defenses for a variety of systems against two kinds of attacks: evasion attacks, in which an attacker makes small changes to the inputs of a machine learning system in order to take control of its outputs, and poisoning attacks, in which an attacker makes subtle changes to a training dataset with the goal of eliciting damaging behavior in models trained on that dataset.
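To make the idea of an evasion attack concrete, the sketch below uses a tiny, hypothetical linear classifier (the weights `w`, bias `b` and input `x` are made up for illustration and have nothing to do with the UMD team's systems). It applies a single sign-gradient step, the idea behind the well-known fast gradient sign method, so that every input feature changes by at most `eps`, yet the predicted class flips.

```python
import numpy as np

# Hypothetical toy linear classifier: class 1 if w . x + b > 0, else class 0.
w = np.array([1.0, -2.0, 0.5])
b = 0.1


def predict(x: np.ndarray) -> int:
    """Return the predicted class (0 or 1) for input x."""
    return int(w @ x + b > 0)


def evade(x: np.ndarray, eps: float) -> np.ndarray:
    """Evasion sketch: move each feature by at most eps to lower the score.

    For a linear score the gradient with respect to x is simply w, so stepping
    against sign(w) reduces the score by eps * ||w||_1 (a sign-gradient step).
    """
    return x - eps * np.sign(w)


x = np.array([0.3, 0.1, 0.2])   # clean input: score = 0.2 + 0.1 = 0.3
x_adv = evade(x, eps=0.15)      # each feature moves by exactly 0.15

print(predict(x), predict(x_adv))  # prints "1 0"
```

The same arithmetic hints at what a certifiable guarantee looks like for this toy model: since a perturbation of size at most `eps` in every feature can shift the score by at most `eps * ||w||_1 = eps * 3.5`, no perturbation with `eps` below 0.3 / 3.5 (about 0.086) can change this particular prediction.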

Vulnerable systems include copyright- and terrorist-detection systems, supply chain management in market-based systems, and systems that authenticate 3D and high-resolution images, video and audio, such as fake news detectors.

“The idea is to come up with better defenses for these kinds of systems and then eventually implement them,” said Goldstein.

Creating defenses with both empirical strength and certifiable guarantees is the researchers’ second goal. In other words, they aim to mathematically prove that the security systems they design cannot be penetrated by certain attacks.

The team’s third goal, building on prior research by Goldstein and Dickerson, is to enhance the theoretical understanding of the vulnerabilities and defenses of these systems.

The UMD project is part of DARPA’s Guaranteeing AI Robustness against Deception (GARD) program, launched in 2019 to address vulnerabilities inherent in machine learning platforms that are deemed critical to the U.S. infrastructure.